Control how your organization uses Claude.

30 people. 30 different Claude setups. No visibility. No standards. No enforcement. systemprompt.io is the governance layer that fixes this.

Personal free tier for individuals. Enterprise pricing on request.


One policy. Every session.

Define how Claude behaves across your organization. Skills, permissions, and rules enforced consistently for every team member, every session, every time.

- Standardized Claude behavior across every team

- Role-based permissions and access control

- Policy enforcement on every tool call

- Works across Cowork, Code, and Desktop

- Update once, sync to the entire organization

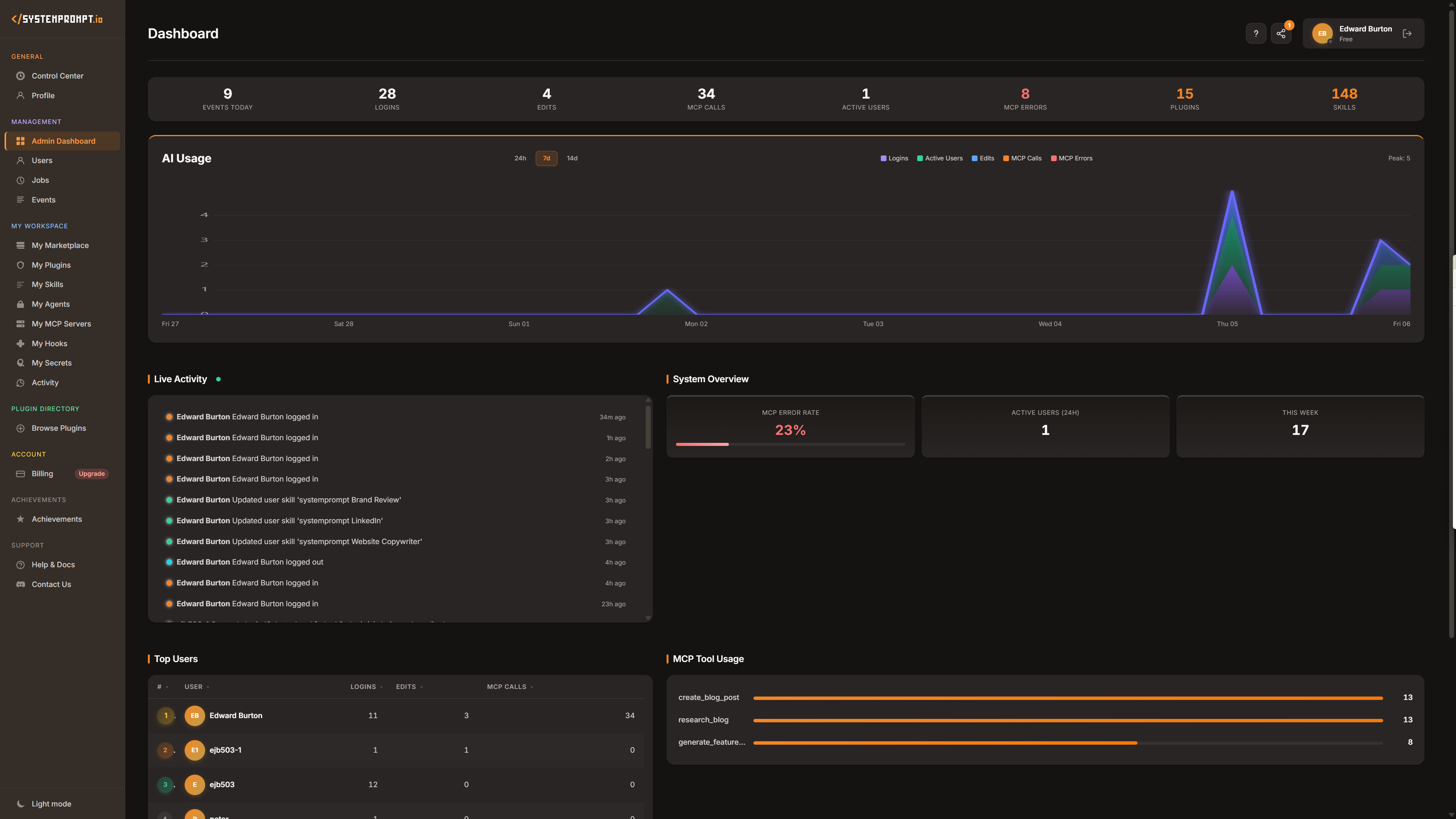

Full visibility. No blind spots.

Know what AI you have deployed, how it is being used, and where. Usage analytics, audit trails, and session observability from a single dashboard.

- Usage analytics across your organization

- Complete audit trail for compliance

- Session-level observability

- Tool usage and permission tracking

- Export and reporting for leadership

Your brand. Your infrastructure.

Self-host or deploy under your own brand. White-label AI governance for SaaS companies that need to offer it to their customers without building it.

- Self-hosted deployment option

- White-label under your own brand

- SSO/SAML integration

- Full data sovereignty

- Custom development and SLA

Deploy governance in minutes

Not months. Not quarters. Minutes.

Connect to Claude

Add your systemprompt.io workspace to Claude. One URL connects your entire organization to centralized governance.

Define your standards

Set the skills, rules, and permissions your organization needs. Our guided setup builds governance policies from your existing processes.

Enforce across every team

Every team member gets the same governed Claude. Same knowledge, same tools, same policies. You control what changes and when.

Governance that fits your domain

Pre-built skill templates for regulated industries and common business functions. Customize for your organization or build from scratch.

Sales

Pipeline analysis, outreach standards, and CRM-connected workflows governed by your playbook.

Customer Support

Ticket triage, response standards, escalation rules, and knowledge base policies enforced consistently.

Product Management

Specs, roadmaps, and research synthesis governed by your product process and templates.

Marketing

Brand voice enforcement, content standards, and campaign workflows your whole team follows.

Legal

Contract review standards, compliance checks, and risk assessment governed by your legal team's rules.

Finance

Financial analysis, reconciliation, and reporting governed by your accounting standards.

Data

Query standards, analysis templates, and data validation rules enforced across your analytics team.

Engineering

Code review standards, architecture decisions, and deployment procedures that persist across sessions.

Operations

Process standards, vendor management, and compliance workflows governed centrally.

What CTOs ask us

"Won't Anthropic just build this?"

Anthropic builds model capabilities, not enterprise governance infrastructure. They expand context windows, add tool integrations, and open new surfaces. Governance is a different market segment entirely.

Platform providers move up the stack, but governance layers remain distinct. The same pattern played out with AWS and Terraform, Salesforce and MuleSoft, and GitHub and CI/CD platforms.

"We have developers. Why not build it ourselves?"

You could. But Anthropic ships new capabilities, plugin architectures, and features every few weeks. An in-house governance solution requires a dedicated team tracking every change, rewriting integrations, and maintaining compatibility indefinitely.

Realistic timeline: 6 to 18 months to production. Then permanent maintenance as the AI landscape evolves underneath you. By the time you ship it, you will need to rebuild it.

"Does this only work with Claude?"

No. systemprompt.io is provider-agnostic. The platform supports Anthropic, OpenAI, Google, and local models through a unified governance layer. Skills, agents, hooks, and governance rules work across providers.

Switching providers requires configuration changes, not rewrites. Your governance policies, access controls, and audit trails remain intact regardless of which model backend you use.

"What happens if systemprompt.io goes away?"

You run the code on your infrastructure. This is not SaaS that disappears when a vendor shuts down. Source code is auditable under BSL-1.1. Skills and agents are stored as portable YAML and Markdown.

Your running instance, your source code, your data formats: all standard, all forkable. The remaining risk is losing security patches and MCP specification updates, and both can be addressed contractually.

Frequently asked questions

What is AI governance and why does my organization need it?

AI governance is the practice of controlling how AI is used across your organization. Without it, every team member uses Claude differently, with different context, different quality, and no visibility into what is happening. You cannot enforce standards, share what works, or audit anything. systemprompt.io provides the governance layer: standardized skills, permissions, observability, and enforcement across every Claude session in your organization.

How is this different from just using Claude directly?

Claude without governance is a different tool for every person who uses it. No shared knowledge, no consistent behavior, no visibility for leadership. systemprompt.io sits between your organization and Claude, ensuring every team member operates with the same standards, the same approved tools, and the same policies. You also get full observability: who is using what, how often, and with what results.

Won't Anthropic just build governance features into Claude Enterprise?

Anthropic focuses on expanding model capabilities: context windows, tool integrations, new surfaces. Claude Enterprise and Cowork add some compliance features, but they govern only Claude. They cannot govern other AI providers, run on your servers with full data sovereignty, or manage custom MCP servers for your internal systems. Platform providers move up the stack. Governance and integration layers remain a distinct product category.

We have developers. Why not build AI governance in-house?

This is the strongest objection, and it deserves an honest answer. Building governance in-house makes sense if you view governance logic as core IP. Most enterprises view it as plumbing. The real cost is not building it; it is maintaining it. Anthropic ships new capabilities every few weeks, and your governance layer has to evolve with them or it becomes a liability. Realistic timeline: 6 to 18 months to production, then a permanent maintenance burden. systemprompt.io absorbs that complexity so your engineers can focus on your actual product.

Where does our data go?

systemprompt.io is a library running on your infrastructure, not a SaaS destination. Data flows through your servers to your configured AI providers. systemprompt.io is the router, not a data store. Source code is auditable under BSL-1.1. Enterprise customers self-host for complete data sovereignty. Nothing leaves your network unless you configure it to.

Can this scale to our organization?

Access control works through role-based permissions that map to your organizational structure. User capacity scales with your PostgreSQL database. Concurrent sessions are limited by your AI providers' rate limits, not by the platform architecture. Plugins are distributed through an org-level marketplace with a fork-on-write model, so one team's customizations never affect another's. Onboarding a new team requires deliberate configuration, and that is by design: governance should be intentional, not accidental.
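To make the fork-on-write idea concrete, here is a minimal Python sketch. It is illustrative only. `Plugin`, `Marketplace`, `publish`, `customize`, and `resolve` are hypothetical names, not the systemprompt.io API, and the sketch assumes a plugin can be modeled as a name plus a tuple of skill files.

```python
from dataclasses import dataclass, field, replace


@dataclass(frozen=True)
class Plugin:
    """Hypothetical plugin record: a name plus the skill files it ships."""
    name: str
    skills: tuple[str, ...]


@dataclass
class Marketplace:
    """Hypothetical org-level marketplace with per-team fork-on-write copies."""
    base: dict[str, Plugin] = field(default_factory=dict)
    # team -> plugin name -> that team's private fork
    forks: dict[str, dict[str, Plugin]] = field(default_factory=dict)

    def publish(self, plugin: Plugin) -> None:
        # Update once at org level; every team without a fork sees the change.
        self.base[plugin.name] = plugin

    def resolve(self, team: str, name: str) -> Plugin:
        # A team gets its own fork if one exists, otherwise the shared org copy.
        team_forks = self.forks.get(team, {})
        if name in team_forks:
            return team_forks[name]
        return self.base[name]

    def customize(self, team: str, name: str, extra_skill: str) -> Plugin:
        # Fork on write: copy the version the team currently sees and change
        # only the copy, so the org copy and other teams are never touched.
        current = self.resolve(team, name)
        forked = replace(current, skills=current.skills + (extra_skill,))
        self.forks.setdefault(team, {})[name] = forked
        return forked


market = Marketplace()
market.publish(Plugin("support", ("triage.md",)))
market.customize("emea", "support", "gdpr-escalation.md")
print(market.resolve("emea", "support").skills)  # ('triage.md', 'gdpr-escalation.md')
print(market.resolve("apac", "support").skills)  # ('triage.md',)
```

Because a fork is a copy, a later org-wide `publish` still reaches every team that has not customized the plugin. A team with a fork keeps its own version, which this sketch replaces only through an explicit action.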

Does this only work with Anthropic and Claude?

No. systemprompt.io is provider-agnostic, with support for Anthropic (Claude Opus, Sonnet, Haiku), OpenAI (GPT-4o, o-series), Google (Gemini), and local models (Ollama, LM Studio). Switching providers requires configuration updates, while skills, agents, hooks, and governance rules remain untouched. The real switching cost is the prompt engineering you have tuned for specific models, not the platform itself.

Take control of how your organization uses Claude.

Book a 15-minute demo. No commitment. Or try the free personal tier.

Book a Demo